The NewsEye blog is written by members of our team. In it, we report back from conferences, reflect on current topics, share internal project news, and present concise overviews of our case studies and project-related publications. Blog posts are mostly in English but may occasionally include other languages the author prefers; after all, we are a multilingual group! Enjoy reading!

Bringing together what belongs together: Thematic grouping of newspaper clippings using LDA and JSD

Return migrants, returning emigrants, returning home, returning families, returnees, return of emigrants, return of prisoners of war, returning back, returning to Austria… this is a list that can easily be expanded. Even if it were possible to cover the topic of return migration in its entirety, many of the word combinations would lead to results that are not relevant.

The German language, with its countless word inflections and word combinations (bigrams), complicates the task. While lemmatisation[1] can reduce the challenges posed by inflection, trying to find all possible word combinations for a topic that is at once so vague and so specific seems a futile undertaking. On the other hand, broadening the search by avoiding word combinations and using only words typical of the topic, such as return or returning for return migration, leads to a considerable number of irrelevant articles, so-called ‘false positives’.

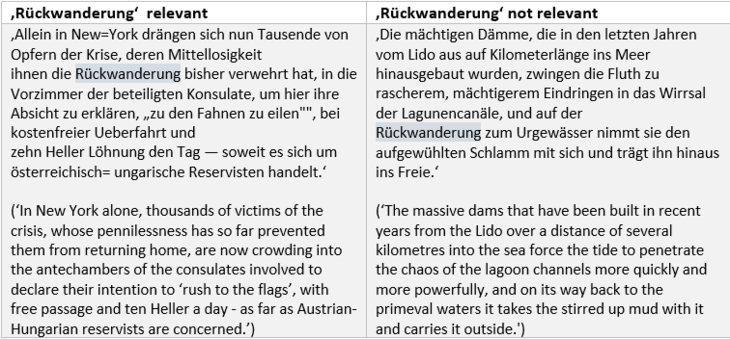

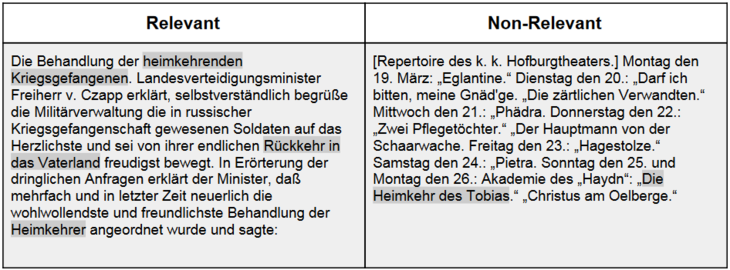

These extracts show, for example, how varied the contexts of the word Rückwanderung (an ambiguous term for return migration) can be:

When building topic-specific text corpora using queries, there is always a trade-off between precision and recall.[2] In other words, one has to choose between a corpus that contains only relevant texts but misses some of the relevant texts available in the entire collection, and a corpus that contains all available relevant texts at the expense of including many irrelevant ones (Gabrielatos 2007; Chowdhury 2010).
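To make the trade-off concrete, here is a minimal sketch that computes precision and recall (as defined in footnote [2]) from sets of document IDs. The IDs and the two example queries are hypothetical and only illustrate how a narrow query tends to score high on precision and a broad one high on recall.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for a set of retrieved document IDs.

    precision = relevant documents found / all documents found
    recall    = relevant documents found / all relevant documents present
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical collection: documents 1, 2, 4, 5 and 6 are the relevant ones.
relevant_docs = {1, 2, 4, 5, 6}
narrow = precision_recall({1, 2, 3}, relevant_docs)        # precise, but misses a lot
broad = precision_recall(range(1, 11), relevant_docs)      # finds everything, plus noise
```

Here the narrow query reaches a precision of 2/3 but a recall of only 0.4, while the broad query reaches full recall at a precision of 0.5.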

Methods like Relative Query Term Relevance (RQTR), a technique for calculating the degree of precision and recall of a query, can be of great help in creating specialised corpora, but they also have their limits. The RQTR method combines keywords (a candidate term and a core query) to calculate the relevance of the candidate term (e.g. the relevance of the term Hamas for the topic of refugees). If a candidate term has a clearly positive RQTR score, adding it can bring more relevant texts into a corpus; if the score is negative, it would add too much noise (Gabrielatos 2007). As Daniel Malone (2020) suggested at the Corpora and Discourse International Conference 2020, this approach can also be adapted to mitigate the problem of polysemous query terms (that is, terms with several meanings). In this case, the RQTR method was used to find good keywords, which were then combined with an ambiguous search term to reduce the number of irrelevant articles. This process, however, depends heavily on the researcher’s choice of candidate terms. Using the context of a search query instead (in this case the whole content of the newspaper clipping in which the keyword appears) to measure relevance can make the search less dependent on the researcher's prior knowledge and help avoid tunnel vision. The question, therefore, is: Are text mining methods able to distinguish between relevant and non-relevant articles on return migration when their only common denominator is the keyword return*?
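The RQTR score discussed above can be sketched in a few lines. We assume the formulation as we understand it from Gabrielatos (2007): the query term relevance QTR is the share of texts containing the candidate term that also match the core query, the baseline is the share of all texts matching the core query, and RQTR expresses the difference as a percentage of the baseline. Please check the original paper before reusing this; all hit counts below are hypothetical.

```python
def rqtr(hits_core_and_term, hits_term, hits_core, total_docs):
    """Relative Query Term Relevance (assumed formulation, see lead-in).

    hits_core_and_term: texts matching both the core query and the candidate term
    hits_term:          texts containing the candidate term
    hits_core:          texts matching the core query
    total_docs:         texts in the whole database
    """
    qtr = hits_core_and_term / hits_term       # relevance of the candidate term
    baseline = hits_core / total_docs          # relevance expected by chance
    return (qtr - baseline) / baseline * 100   # > 0: term adds relevant texts, < 0: noise


# Hypothetical counts for two candidate terms in a database of 10,000 texts
good_term = rqtr(60, 200, 500, 10_000)     # clearly positive score
noisy_term = rqtr(30, 1000, 500, 10_000)   # negative score
```

With these invented counts, the first term scores 500 (it co-occurs with the core query six times more often than the baseline), while the second scores -40 and would mainly add noise.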

The experiment

Creating training and testing corpora / preprocessing the data

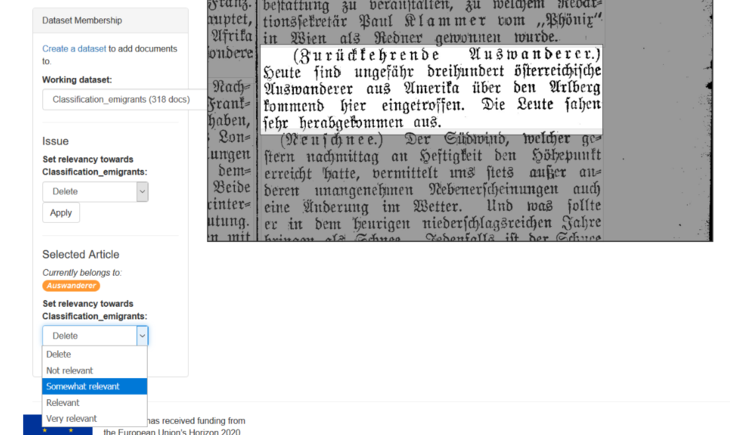

To enable machine learning, a manually annotated collection was created that is representative of the topic and contains both relevant and non-relevant articles. This step was performed with the beta version of the NewsEye platform, whose dataset function makes creating such a collection simple and fast.

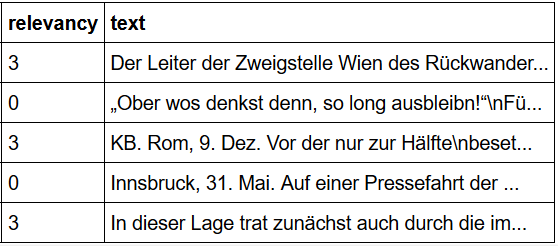

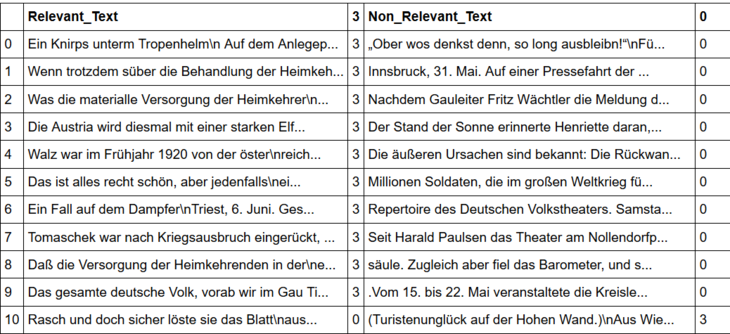

To build this collection, ambiguous keywords like Heimkehr (returning home), Rückwanderung (return migration) and Heimat zurückkehren (returning home) as well as unambiguous keywords like Heimkehrer (returnee) or Rückwanderer (returnee) were used. The articles found were first saved to a dataset in the workspace and then classified manually: relevant articles were labelled 3, irrelevant articles 0.

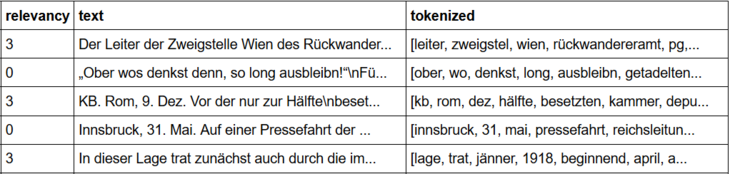

For training and testing, a collection of 216 article clippings was created manually. After exporting this collection, a Jupyter notebook was used as an environment that combines human-readable narrative with computer-readable code. Using the Python packages Gensim and SpaCy, the collection was preprocessed: stop words and punctuation were removed, and the words were stemmed and tokenised[3]. In addition, article clippings containing fewer than 30 tokens were removed, since Topic Modeling does not work well with short documents.
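The preprocessing step can be sketched without external dependencies as follows. This is not the Gensim/SpaCy pipeline used in the experiment: the tiny German stop-word list is a hypothetical placeholder, and stemming is left out (in practice a German stemmer would be applied to each token). Only the 30-token minimum is taken from the text.

```python
import re

# Hypothetical, deliberately short German stop-word list; a real pipeline
# would use a full list such as the one shipped with spaCy's German model.
STOP_WORDS = {
    "der", "die", "das", "und", "in", "zu", "den", "von", "mit", "nach",
    "aus", "ist", "im", "ein", "eine", "sich", "auf", "für", "dem", "des",
}

# Runs of letters (including umlauts and ß); punctuation and digits are dropped.
TOKEN_RE = re.compile(r"[^\W\d_]+")


def tokenize(text):
    """Lowercase, split into word tokens and drop stop words and 1-letter tokens."""
    return [
        token for token in TOKEN_RE.findall(text.lower())
        if token not in STOP_WORDS and len(token) > 1
    ]


def preprocess_collection(clippings, min_tokens=30):
    """Tokenise every clipping and keep only those with at least min_tokens tokens."""
    kept = {}
    for clip_id, text in clippings.items():
        tokens = tokenize(text)
        if len(tokens) >= min_tokens:
            kept[clip_id] = tokens
    return kept
```

For instance, `tokenize("Die Heimkehr der Kriegsgefangenen, 1920!")` keeps only `heimkehr` and `kriegsgefangenen`.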

The collection was then divided into a training corpus and a test corpus using a mask of binary values. This made it possible to have a good mix of relevant and non-relevant articles in each corpus. The training corpus was used to train the Topic Modeling algorithm, while the articles of the test corpus served, in a later step, as queries for retrieving similar articles.
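One way to build such a binary mask while keeping relevant and non-relevant articles mixed in both corpora is to draw it separately for each label (a stratified split). The sketch below assumes this; the 30 percent test share and the seed are hypothetical values, not the ones used in the experiment.

```python
import random


def stratified_mask(labels, test_share=0.3, seed=42):
    """Return one boolean per document: True = training corpus, False = test corpus.

    The mask is drawn separately for each label (e.g. 3 = relevant,
    0 = non-relevant), so both corpora receive a similar mix of labels.
    """
    rng = random.Random(seed)
    mask = [True] * len(labels)
    indices_by_label = {}
    for i, label in enumerate(labels):
        indices_by_label.setdefault(label, []).append(i)
    for indices in indices_by_label.values():
        rng.shuffle(indices)
        n_test = round(len(indices) * test_share)
        for i in indices[:n_test]:
            mask[i] = False
    return mask
```

Applying the mask is then a matter of filtering, e.g. `train = [doc for doc, keep in zip(docs, mask) if keep]` and the complement for the test corpus.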

Using Topic Modeling and Jensen-Shannon Distance to group similar articles

Topic Modeling with Latent Dirichlet Allocation (LDA), which automatically detects word and phrase patterns, serves various purposes: understanding the topics in a corpus, gaining insight into the type or genre of its documents (news, advertisements, etc.), capturing the evolution of topics and trends within multilingual collections (Zosa and Granroth-Wilding, 2019), or finding the most similar documents in a corpus. Topic Modeling is an unsupervised machine learning technique that clusters co-occurring words and similar expressions into groups that best characterize a set of documents. Because every document is then represented as a distribution over these topics, documents can be compared using similarity measures, which makes the method well suited for separating relevant from non-relevant texts.
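To show what "a distribution over topics" means in practice, here is a minimal, dependency-free LDA sketch using collapsed Gibbs sampling. It swaps in this textbook inference method for illustration only; the experiment itself used Gensim (roughly `gensim.models.LdaModel(corpus, id2word=dictionary, num_topics=250, alpha=...)`). The default `alpha`, `beta` and iteration count are assumptions; a low `alpha` pushes each document towards a few dominant topics.

```python
import random


def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=200, seed=0):
    """Fit LDA by collapsed Gibbs sampling and return one topic distribution per document.

    docs: list of token lists. alpha: document-topic prior (low = few topics
    per document). beta: topic-word prior.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V, K = len(vocab), num_topics
    doc_topic = [[0] * K for _ in docs]         # tokens per (document, topic)
    topic_word = [[0] * V for _ in range(K)]    # tokens per (topic, word)
    topic_total = [0] * K                       # tokens per topic
    assignments = []

    # Random initial topic assignment for every token
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(K)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, k = word_id[w], assignments[d][i]
                # Remove the token's current assignment from the counts
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # Full conditional p(z = t | all other assignments)
                weights = [
                    (doc_topic[d][t] + alpha) * (topic_word[t][v] + beta)
                    / (topic_total[t] + V * beta)
                    for t in range(K)
                ]
                k = rng.choices(range(K), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1

    # Smoothed per-document topic distributions (theta)
    return [
        [(doc_topic[d][t] + alpha) / (len(doc) + K * alpha) for t in range(K)]
        for d, doc in enumerate(docs)
    ]
```

A toy call such as `lda_gibbs([["heimkehr", "krieg", "gefangene"] * 3, ["oratorium", "haydn", "musik"] * 3], num_topics=2)` returns two probability vectors of length 2; these vectors are what the later similarity step compares.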

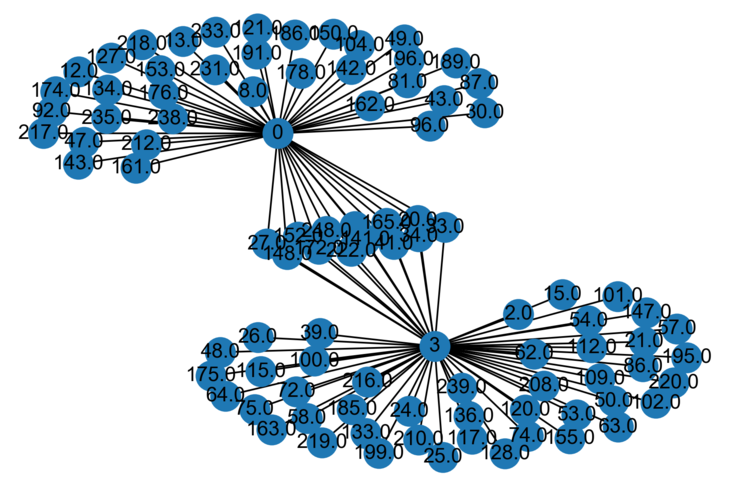

First, LDA was used to calculate the topic distribution of each article clipping in the training corpus. In our experiments, a high number of topics (250 in this case) led to better results. To see how well the dominant topics separate relevant (3) from non-relevant (0) articles, a network visualization was plotted using the Python packages Pandas and Networkx:

Since the model was configured so that each document is represented by only a small number of topics (a low alpha value), we assumed that the classification of the article clippings would work as well as the separation in the network graph suggested. Let's see.
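The data behind such a network graph can be sketched as follows: each document is linked to its dominant topic, and the labels are counted per topic. The purity score is our own hypothetical summary of how cleanly the topics separate the labels, not a measure from the experiment. The resulting edge list could be handed to Networkx, e.g. via `networkx.Graph().add_edges_from(edges)`, for plotting.

```python
from collections import Counter, defaultdict


def dominant_topic(theta):
    """Index of the most probable topic in one document's topic distribution."""
    return max(range(len(theta)), key=theta.__getitem__)


def document_topic_edges(thetas, labels):
    """Link each document to its dominant topic and count the labels per topic."""
    edges = []
    label_counts = defaultdict(Counter)
    for doc_id, (theta, label) in enumerate(zip(thetas, labels)):
        topic = dominant_topic(theta)
        edges.append((f"doc{doc_id}", f"topic{topic}"))
        label_counts[topic][label] += 1
    return edges, label_counts


def topic_purity(label_counts):
    """Share of documents carrying the majority label of their dominant topic (1.0 = clean split)."""
    total = sum(sum(counts.values()) for counts in label_counts.values())
    majority = sum(counts.most_common(1)[0][1] for counts in label_counts.values())
    return majority / total
```

A purity close to 1.0 would correspond to a graph in which relevant (3) and non-relevant (0) articles attach to different topics.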

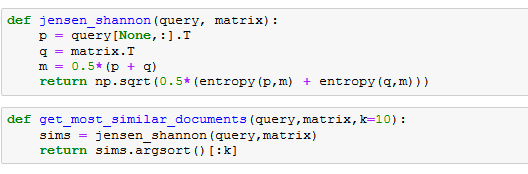

The topic distributions generated for the article clippings in the training corpus served as a reference against which other, unseen articles from the test corpus were compared in order to distinguish automatically between relevant and non-relevant articles. For this comparison, the Jensen-Shannon Distance (JSD) was used to measure the similarity between the topic distribution of an unseen article and the topic distributions of the articles in the training corpus. The Jensen-Shannon distance indicates which documents are statistically "closer" (and therefore more alike) by comparing the divergence of their topic distributions: the smaller the distance, the more similar two articles are.
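The Jensen-Shannon distance is the square root of the Jensen-Shannon divergence, which averages the Kullback-Leibler divergences of both distributions from their mixture. A minimal pure-Python sketch with base-2 logarithms (so the distance lies between 0 and 1) could look like this; SciPy offers the same measure as `scipy.spatial.distance.jensenshannon(p, q, base=2)`.

```python
import math


def kl_divergence(p, q):
    """Kullback-Leibler divergence KL(p || q) in bits; terms with p_i = 0 contribute 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def jensen_shannon_distance(p, q):
    """Square root of the Jensen-Shannon divergence between two probability vectors."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]   # mixture distribution
    divergence = 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)
    return math.sqrt(max(divergence, 0.0))        # guard against tiny negative rounding errors
```

Identical topic distributions have a distance of 0, while distributions with no topic in common (e.g. `[1.0, 0.0]` and `[0.0, 1.0]`) reach the maximum of 1. Unlike the plain KL divergence, the measure is symmetric.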

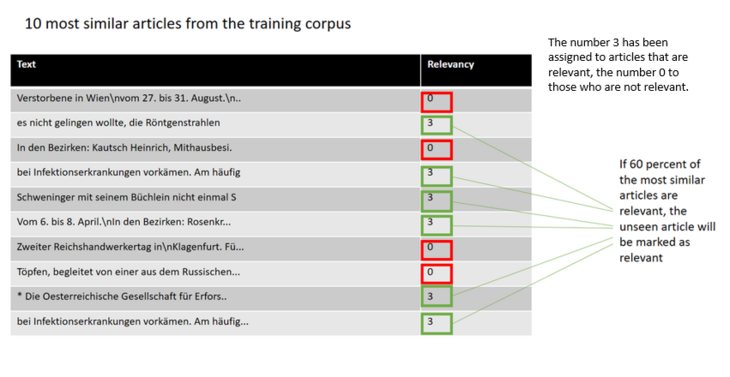

Accordingly, the topic distribution of each new article (from the test corpus) was compared to the topic distribution of every article in the training corpus. Then, for each unseen article, the 10 most similar articles from the training corpus were extracted. These articles carry the manually assigned relevance label. If at least 60 percent of these similar articles were annotated as relevant, the new article was marked as relevant, too; otherwise, it was marked as irrelevant.
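The decision rule described above is essentially a nearest-neighbour vote. The sketch below assumes that the distances (e.g. Jensen-Shannon distances) between one unseen article and every training article have already been computed, and reads "60 percent" as "at least 60 percent"; the function name and parameters are hypothetical.

```python
def classify_by_neighbours(distances, training_labels, k=10, threshold=0.6,
                           relevant_label=3, irrelevant_label=0):
    """Label an unseen article by the annotations of its k nearest training articles.

    distances[i] is the distance between the unseen article and training article i;
    training_labels[i] is that article's manual label (3 = relevant, 0 = not).
    """
    nearest = sorted(range(len(distances)), key=distances.__getitem__)[:k]
    relevant_share = sum(training_labels[i] == relevant_label for i in nearest) / len(nearest)
    return relevant_label if relevant_share >= threshold else irrelevant_label
```

With 10 neighbours, six relevant ones are enough to mark the new article as relevant, while five are not. Raising the threshold makes the classifier more conservative, trading recall for precision.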

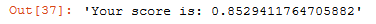

Classifying the full collection into relevant and non-relevant newspaper clippings

Since the results for the test corpus were satisfactory, the method was applied to the entire collection on return migration. As this collection no longer contained any information on the relevance of the newspaper clippings, the results could not be verified automatically. However, spot checks of the classified texts again showed that the method worked surprisingly well. For example, the newspaper clipping on the return of prisoners of war was correctly classified as relevant, while the clipping about Joseph Haydn’s oratorio The Return of Tobias was correctly classified as non-relevant.

Conclusion

Even though Topic Modeling is generally used to find thematic clusters, i.e. entry points to a collection, the example here shows that it can also be of considerable benefit for classification. The success of such a classification is easy to measure against a manually annotated test corpus, and its error rate can be controlled. To use this method for newspaper clippings, however, article separation is a must. Methods for good automated article separation are still under development (Weidemann et al., 2019). Once available, they will open the door to a new era of corpus analysis. The NewsEye project will take a major step in this direction, also using similarity measures to support article separation.

Footnotes

[1] The process of grouping together the inflected forms of a word so they can be analysed as a single item, identified by the word's lemma, or dictionary form.

[2] Recall is the number of relevant documents found by a search divided by the total number of relevant documents present in the collection, while precision is the number of relevant documents found by a search divided by the total number of documents found by that search.

[3] Tokenisation: breaking text into individual linguistic units (tokens).